What am I up to?

We’re in an exciting moment where there’s no question about the transformative potential of generative AI tools, but there are questions about whether today’s tools are realizing that transformation: whether they’re improving organizational productivity or the quality of work we collectively create. As a Senior Applied Scientist embedded in Microsoft’s Copilot Analytics team, I analyze in-the-wild usage of AI tools to quickly get to the truth about what’s working and what’s not, and redesign systems to more effectively help individuals and organizations achieve more with AI. In doing this work, I care about ensuring that AI systems work with us (rather than replace us) and that they expand our capacity to make, to wonder, to learn, to connect, and to express.

Broadly, my research focuses on developing computational interventions (agents, mechanisms, workflows) that help collectives (organizations, communities, publics) accomplish more together, along with evaluative tools and experiments to understand how collectives respond to computational intervention. My work primarily advances the fields of human-computer interaction (HCI) and artificial intelligence by drawing on insights from fields that study collective behavior (social psychology, sociology, organizational behavior).

Previously, I completed my PhD in Human-Computer Interaction at Carnegie Mellon University, where I worked with Chinmay Kulkarni and Geoff Kaufman. Before that, I completed my undergraduate studies in Electrical Engineering at IIT Kharagpur. Along the way, I also spent time doing HCI research at Microsoft Research (Human Understanding and Empathy Group and Multilingual Systems Group) and Stanford HCI.

Find me: Seattle, WA • prkhadpe@microsoft.com • @pranavkhadpe

Khadpe, P.*, Xu, O.*, Kaufman, G., & Kulkarni, C. (2025). Hug Reports: Supporting Expression of Appreciation between Users and Contributors of Open Source Software Packages. In Proceedings of the ACM on Human-Computer Interaction (CSCW 2025). (* denotes equal contribution)

Google Award for Inclusion Research • PDF

Bali, S., Khadpe, P., Kaufman, G., & Kulkarni, C. (2023). Nooks: Social Spaces to Lower Hesitations in Interacting with New People at Work. In Proceedings of the 2023 CHI Conference on Human Factors in Computing Systems (CHI 2023).

Honorable Mention Award (top 5%) • PDF • SCS News

Khadpe, P., Kulkarni, C., & Kaufman, G. (2022). Empathosphere: Promoting Constructive Communication in Ad-Hoc Virtual Teams through Perspective-Taking Spaces. In Proceedings of the ACM on Human-Computer Interaction (CSCW 2022).

PDF

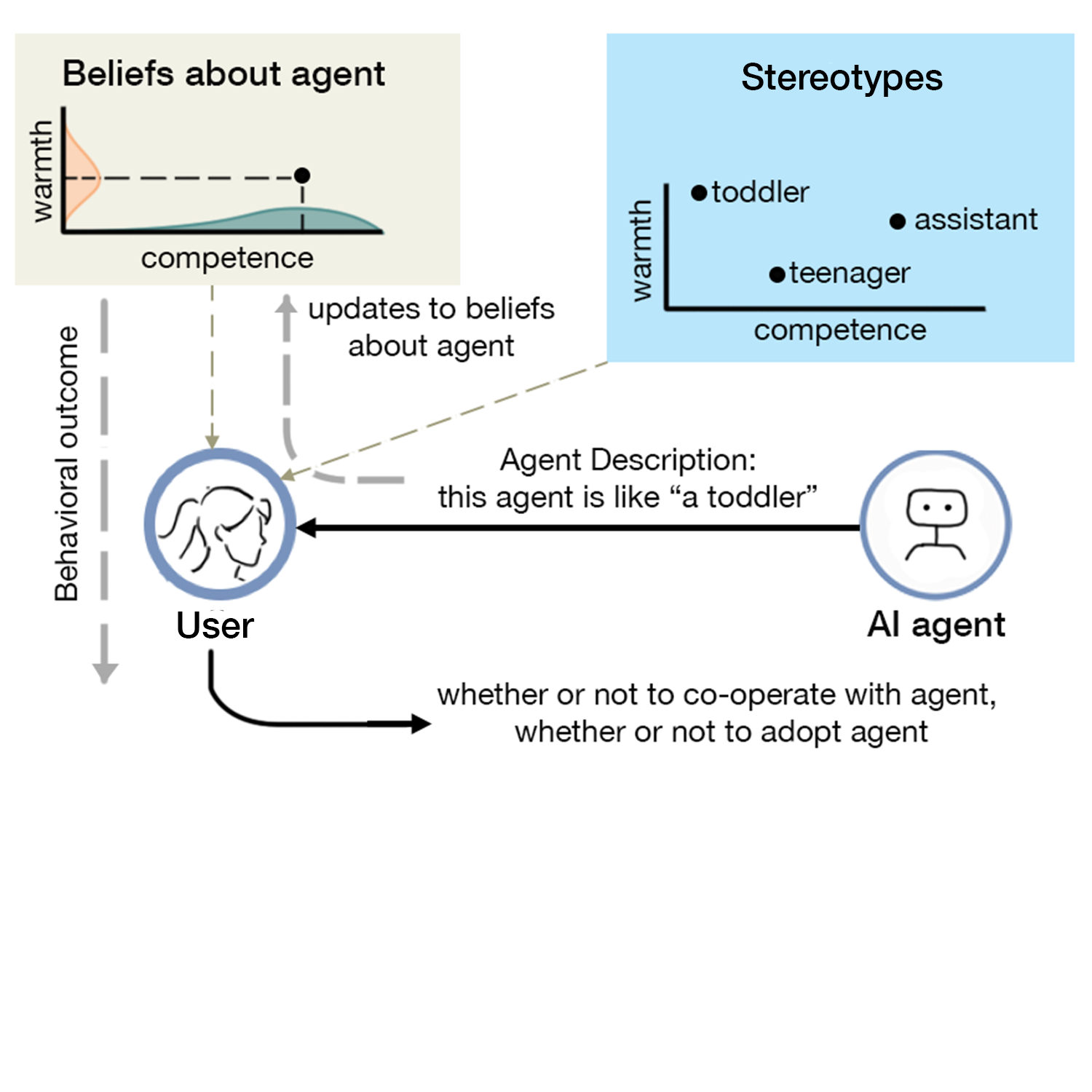

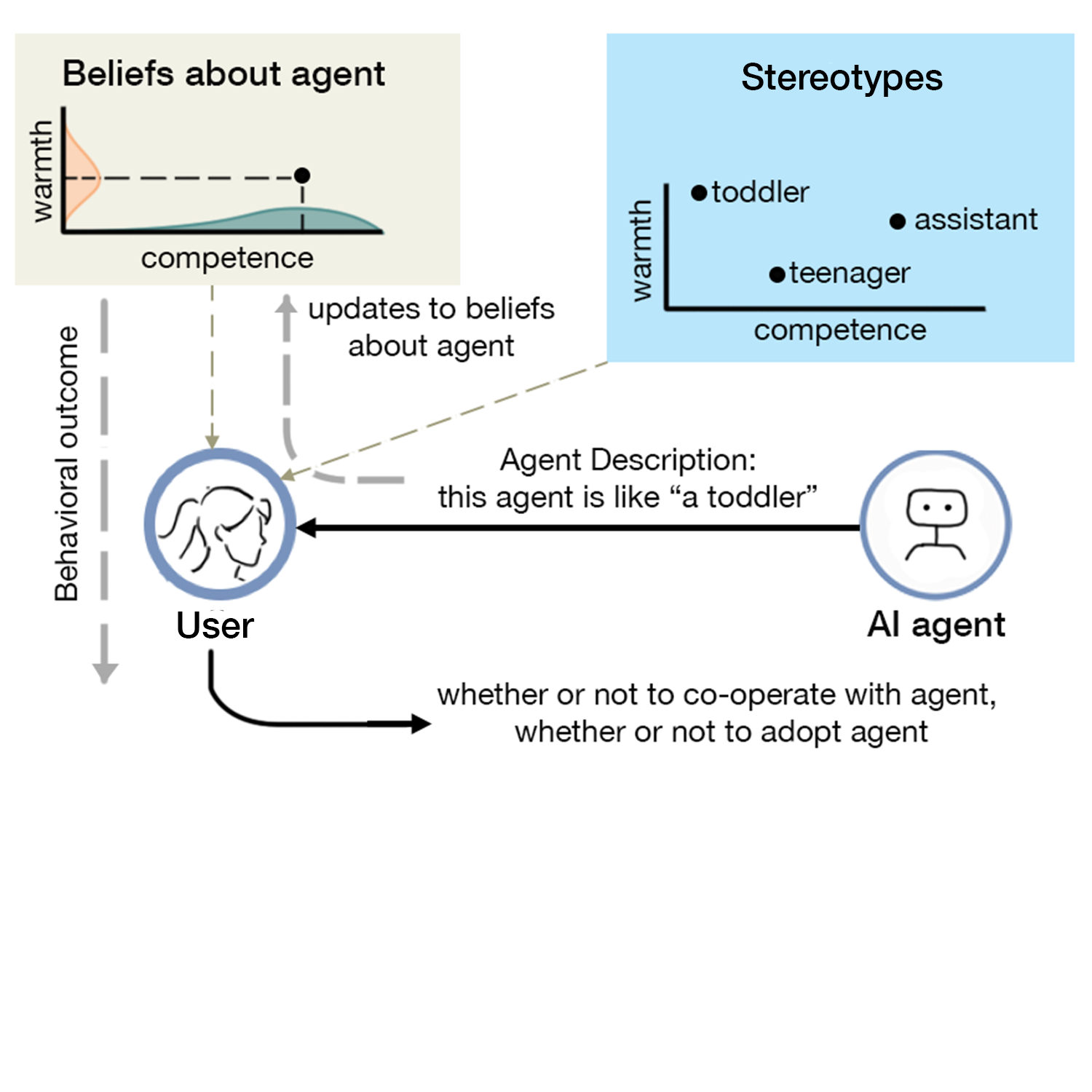

Khadpe, P., Krishna, R., Fei-Fei, L., Hancock, J., & Bernstein, M. (2020). Conceptual Metaphors Impact Perceptions of Human-AI Collaboration. In Proceedings of the ACM on Human-Computer Interaction (CSCW 2020).

Honorable Mention Award (top 5%) • PDF • WSJ • Stanford HAI Blog